Data Disaggregation

Disaggregated data analysis in Social Media

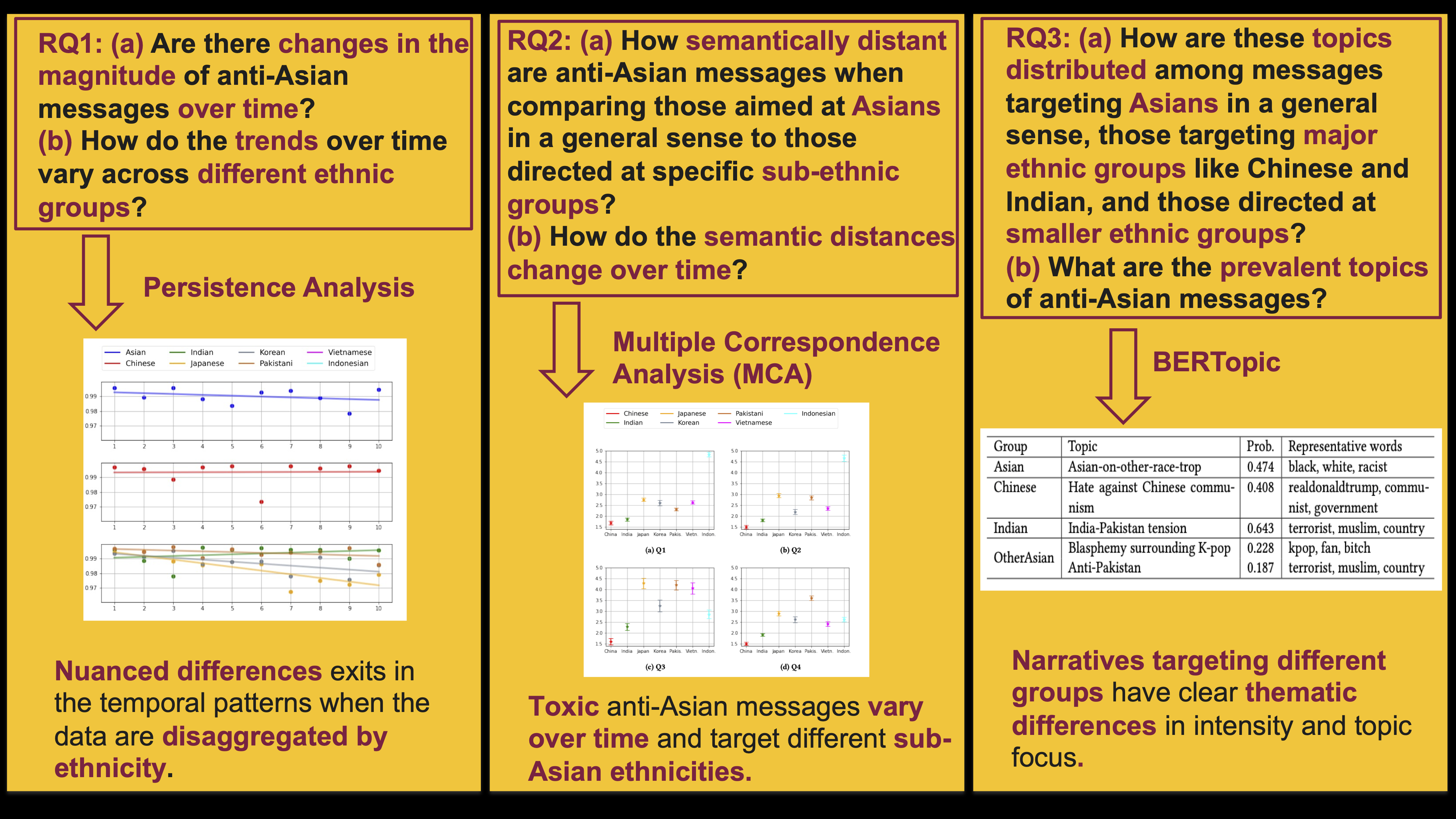

Recent policy initiatives have acknowledged the importance of disaggregating data pertaining to diverse Asian ethnic communities to gain a more comprehensive understanding of their current status and to improve their overall well-being. However, research on anti-Asian racism has thus far fallen short of properly incorporating data disaggregation practices. Our study addresses this gap by collecting 12-month-long data from X (formerly known as Twitter) that contain diverse sub-ethnic group representations within Asian communities. In this dataset, we break down anti-Asian toxic messages based on both temporal and ethnic factors and conduct a series of comparative analyses of toxic messages, targeting different ethnic groups. Using temporal persistence analysis, 𝑛-gram-based correspondence analysis, and topic modeling, this study provides compelling evidence that anti-Asian messages comprise various distinctive narratives. Certain messages targeting sub-ethnic Asian groups entail different topics that distinguish them from those targeting Asians in a generic manner or those aimed at major ethnic groups, such as Chinese and Indian. By introducing several techniques that facilitate comparisons of online anti-Asian hate towards diverse ethnic communities, this study highlights the importance of taking a nuanced and disaggregated approach for understanding racial hatred to formulate effective mitigation strategies.

Reference: Fan, et al, “Not All Asians are the Same: A Disaggregated Approach to Identifying Anti-Asian Racism in Social Media,” WWW ‘24: Proceedings of the ACM Web Conference 2024. [Paper][3-min video summary]

On-going project: Disaggregated data analysis in large language models (LLMs)

This project investigates how equitable data mining can enhance large language models (LLMs) to better serve ethnic minorities. The approach involves creating specialized datasets that reflect the unique needs and contexts of different ethnic communities, aiming to reduce biases often present in AI outputs. The work progresses through multiple stages, from developing improved AI prompts to evaluating how well the system tailors scientific information for specific communities. Community experts will help assess the system’s inclusivity, and the project will also provide public education on fair data practices in AI.

This research activity is funded by National Science Foundation under the award, CNS-2210137, titled, “EAGER: DCL: SaTC: Enabling Interdisciplinary Collaboration: Combatting Disinformation and Racial Bias: A Deep-Learning-Assisted Investigation of Temporal Dynamics of Disinformation” [Award Description]